Towards Explainable, Privacy-Preserved Human Motion Analysis

© Deligianni et al., IEEE Journal of Biomedical and Health Informatics, 2019.

Human Motion Analysis and Affect Recognition

Human motion analysis is a powerful tool for extracting biomarkers of disease progression in neurological conditions such as Parkinson's disease and Alzheimer's disease. Gait analysis has also revealed several indices that relate to emotional well-being. For example, increased gait speed, step length and arm swing have been associated with positive emotions, whereas a low gait initiation reaction time and flexion of posture have been associated with negative feelings (Deligianni et al. 2019). Strong neuroscientific evidence shows that these relationships arise from an interaction between the brain networks involved in gait and emotion. It is therefore not surprising that gait has also been related to mood disorders such as depression and anxiety.

Gait is composed of many highly expressive characteristics that can be used to identify human subjects, yet most solutions fail to address this and so disregard the subject's privacy. This work introduces a novel deep neural network architecture to disentangle human emotions and biometrics. In particular, we propose a cross-subject transfer learning technique for training a multi-encoder autoencoder deep neural network to learn disentangled latent representations of human motion features. By disentangling subject biometrics from the gait data, we show that the subject's privacy is preserved while affect recognition outperforms traditional methods. Furthermore, we exploit Guided Grad-CAM to provide global explanations of the model's decisions across gait cycles. We compare our method against existing methods at recognizing emotions using both 3D temporal joint signals and manually extracted features. We also show that this data can easily be exploited to expose a subject's identity. Our method shows significant improvement and highlights the joints with the most significant influence across the average gait cycle.

Malek-Podjaski et al.
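
To make the multi-encoder idea concrete, the following is a minimal NumPy sketch of a forward pass through an autoencoder with two encoders and one shared decoder. It is an illustration under stated assumptions, not the architecture from the paper: the layer sizes, the single-layer encoders, the names `affect_encoder`, `identity_encoder` and `swap_identity`, and the random input data are all hypothetical, and no training is performed.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 21 joints x 3 coordinates per frame, two 8-d latent codes.
N_FEATURES, LATENT = 63, 8


def dense(n_in, n_out):
    """Return small random weights and a zero bias for one linear layer."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)


# Two separate encoders and one shared decoder (all untrained here).
affect_encoder = dense(N_FEATURES, LATENT)    # intended to hold emotion cues
identity_encoder = dense(N_FEATURES, LATENT)  # intended to hold biometric cues
decoder = dense(2 * LATENT, N_FEATURES)       # reconstructs motion from both codes


def encode(x):
    """Map motion features to an (affect, identity) pair of latent codes."""
    w_a, b_a = affect_encoder
    w_i, b_i = identity_encoder
    return np.tanh(x @ w_a + b_a), np.tanh(x @ w_i + b_i)


def decode(z_affect, z_identity):
    """Reconstruct motion features from the concatenated latent codes."""
    w, b = decoder
    return np.concatenate([z_affect, z_identity], axis=-1) @ w + b


def reconstruction_loss(x):
    """Mean squared error between the input and its reconstruction."""
    z_a, z_i = encode(x)
    return float(np.mean((decode(z_a, z_i) - x) ** 2))


def swap_identity(x_source, x_other):
    """Decode the affect code of x_source with the identity code of x_other.

    Assumption: this latent swap is a common way to probe disentanglement;
    it is not claimed to be the training procedure used in the paper.
    """
    z_a, _ = encode(x_source)
    _, z_i = encode(x_other)
    return decode(z_a, z_i)


# Random stand-in for four frames of joint coordinates.
frames = rng.normal(size=(4, N_FEATURES))
print(reconstruction_loss(frames))
```

In a privacy-preserving setting, only the affect code would be passed to a downstream emotion classifier, so the identity code never leaves the model. Whether the two codes actually separate emotion from biometrics depends entirely on how the network is trained, which this sketch does not cover.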


From Real-data to Synthetic Data and Vice-Versa


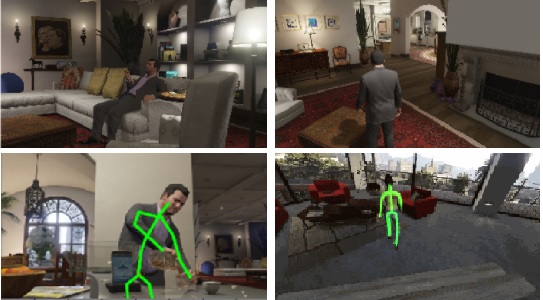

Simulation of human motion within indoor scenes, based on both mocap datasets and photo-realistic game engines.

This approach is versatile, and it can also provide information on how to address occlusions and multi-person scenarios in a social context.

Furthermore, it can create unlimited human motion datasets along with their ground truth, significantly improving the ability to design deep learning models.

© M. Malek-Podjaski and F. Deligianni


Funding and Collaborations

Related Publications

M. Malek-Podjaski and F. Deligianni, ‘Towards Explainable, Privacy-Preserved Human-Motion Affect Recognition’, IEEE Symposium Series on Computational Intelligence (SSCI), 2021.

I. Domingos, G.-Z. Yang and F. Deligianni, ‘Intention Detection of Gait Adaptation in Natural Settings’, IEEE Symposium Series on Computational Intelligence (SSCI), 2021.

X. Gu, Y. Guo, F. Deligianni, B. Lo and G.-Z. Yang, ‘Cross-subject and cross-modal transfer for generalized abnormal gait pattern recognition’, IEEE Transactions on Neural Networks and Learning Systems, 2020.

F. Deligianni, Y. Guo and G.-Z. Yang, ‘From Emotions to Mood Disorders: A Survey on Gait Analysis Methodology’, IEEE Journal of Biomedical and Health Informatics, 23(6), 2019.

Y. Guo, F. Deligianni, X. Gu and G.-Z. Yang, ‘3-D Canonical pose estimation and abnormal gait recognition with a single RGB-D camera’, IEEE Robotics and Automation Letters, 2019.

X. Gu, Y. Guo, F. Deligianni and G.-Z. Yang, ‘Coupled Real-Synthetic Domain Adaptation for Real-World Deep Depth Enhancement’, IEEE Transactions on Image Processing, 2020.

Y. Ma, H. M. Paterson and F. E. Pollick, ‘A motion capture library for the study of identity, gender, and emotion perception from biological motion’, Behavior Research Methods, vol. 38, no. 1, pp. 134–141, 2006.
